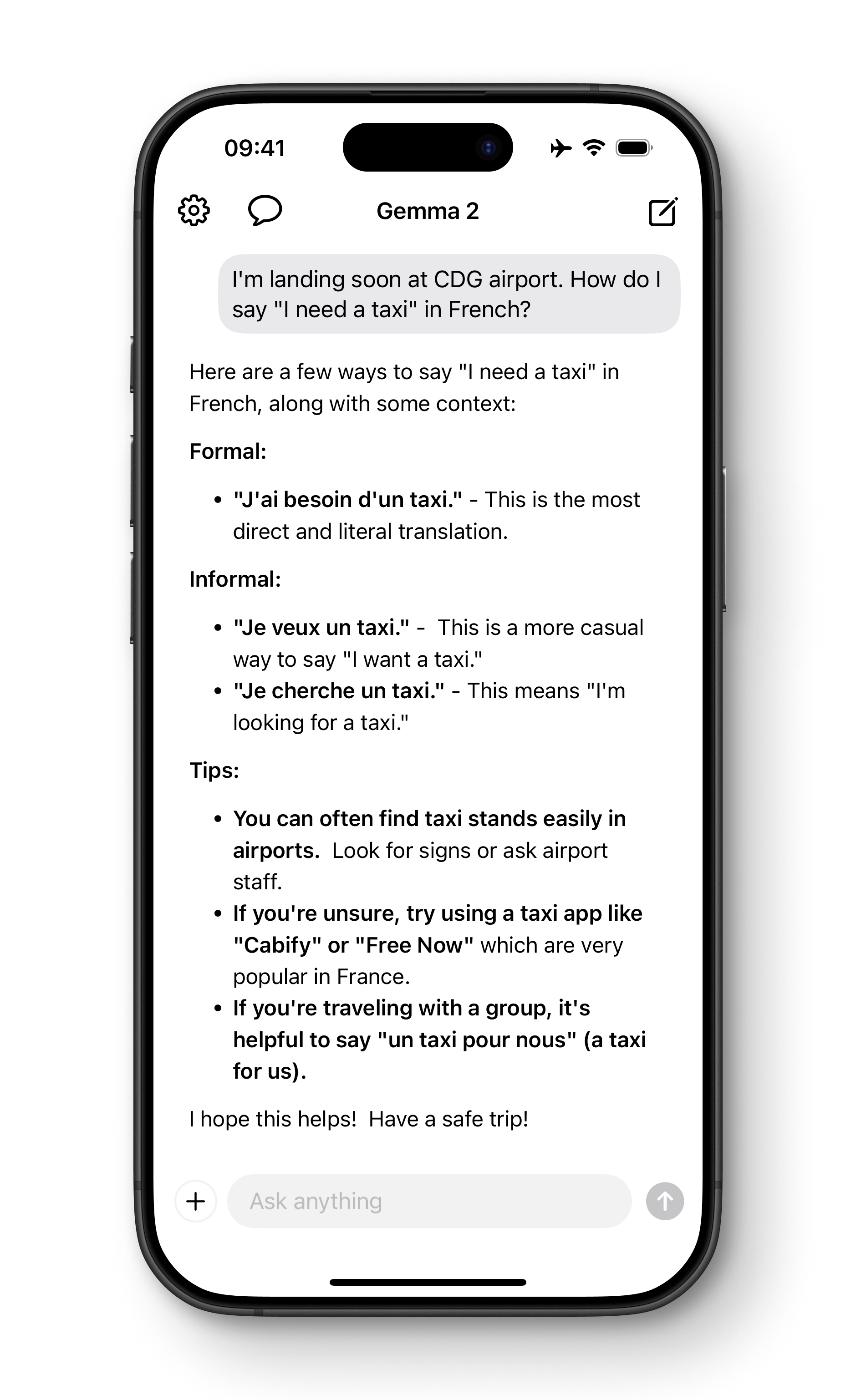

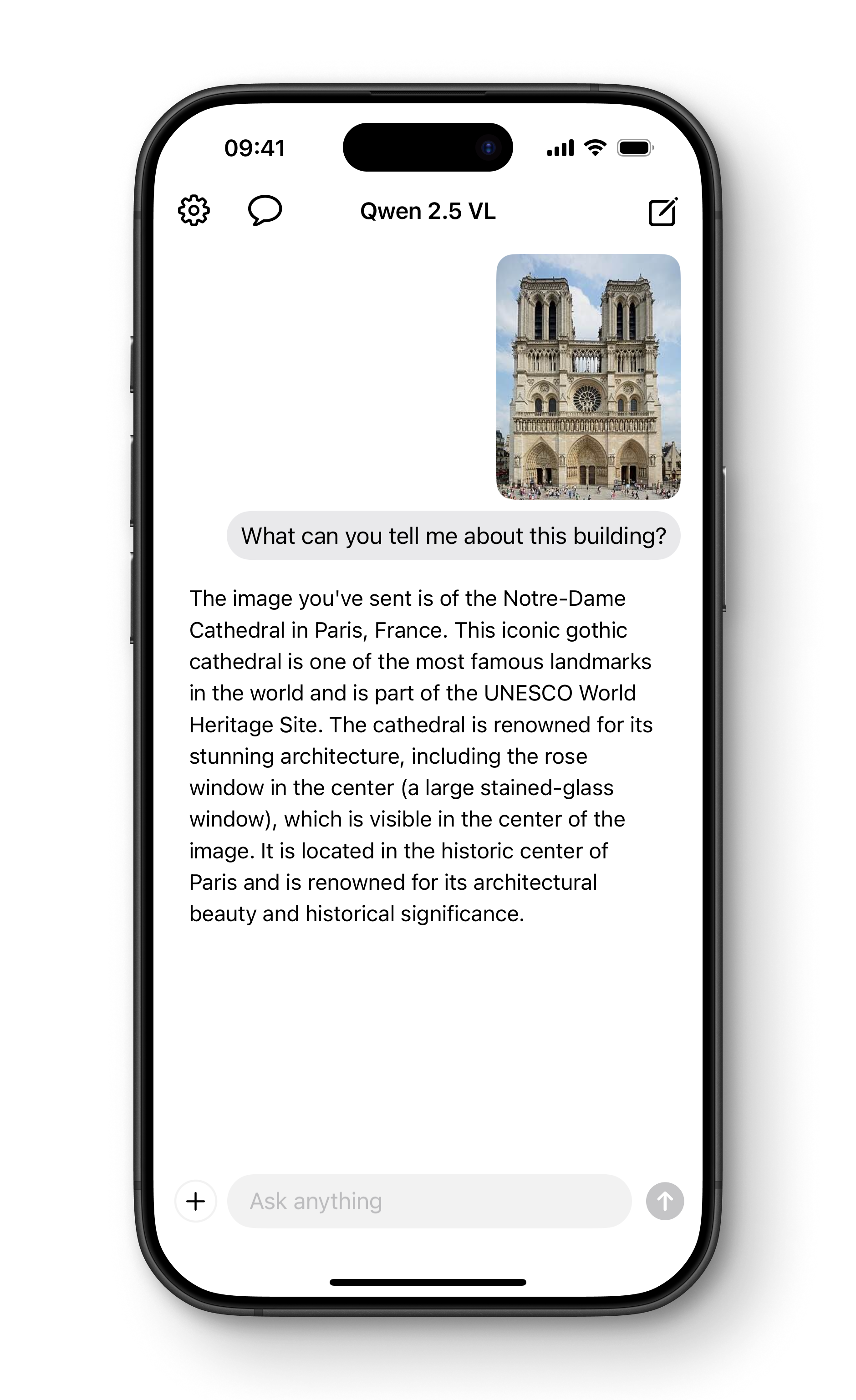

Offline.

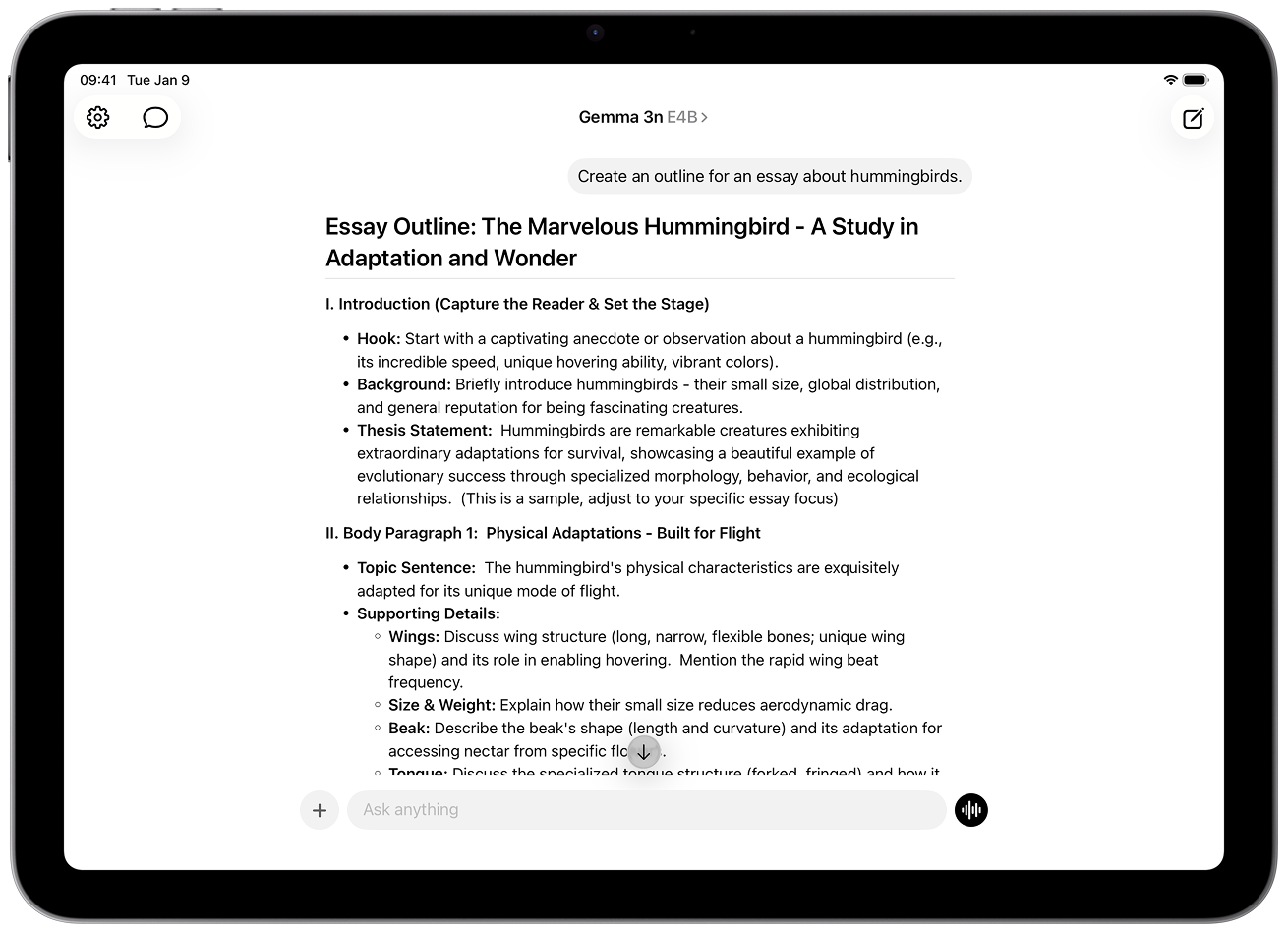

Your personal AI assistant that runs completely offline on your device. No internet connection or login required. Just download a model and start using it.

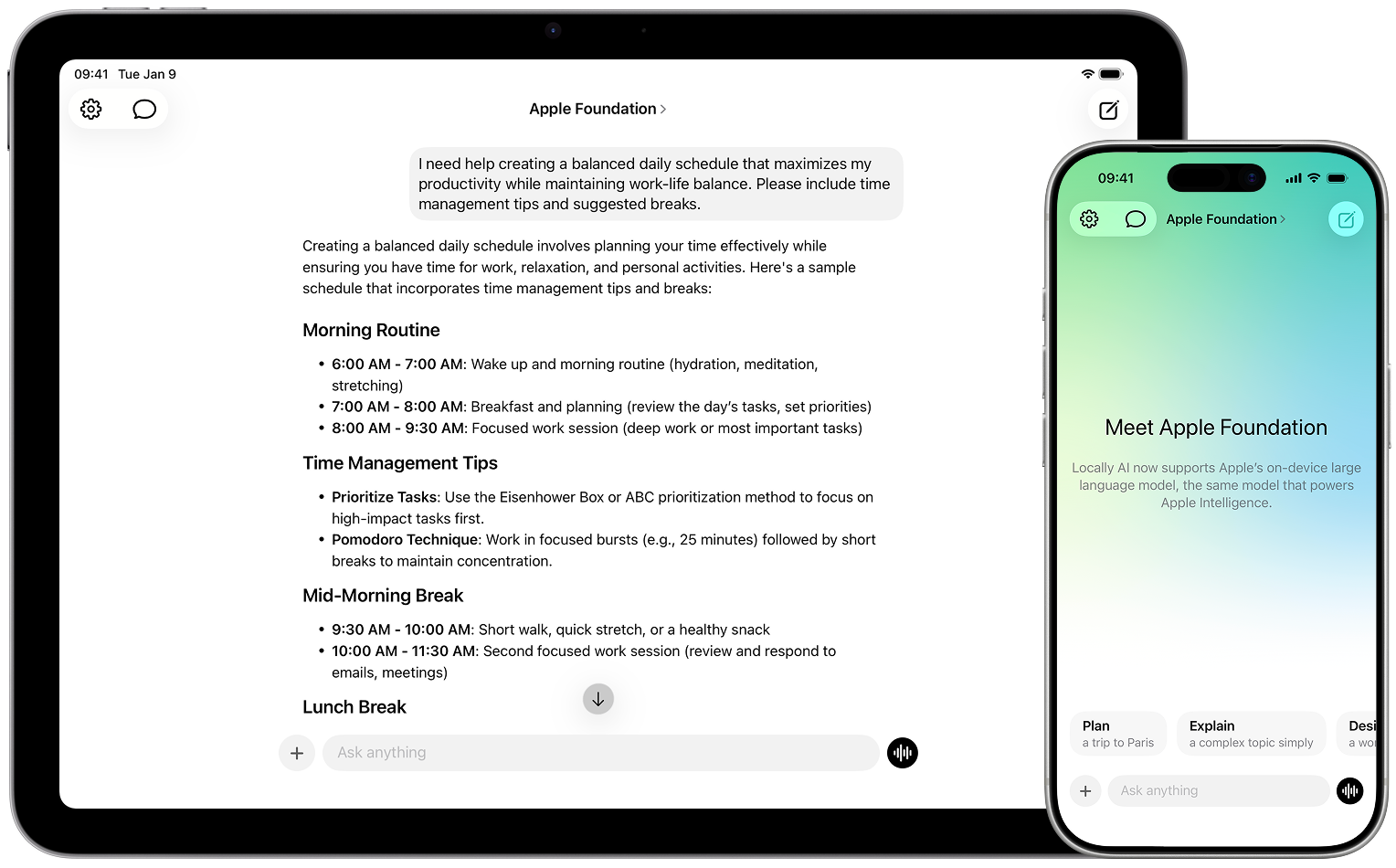

Private & secure.

All processing happens locally on your device. Your data never leaves your control. No cloud processing, no data collection, complete privacy guaranteed.

Apple Silicon Optimized.

Leverages powerful language and vision models specifically optimized for Apple Silicon chips for maximum performance and efficiency.